I'm Eric

I work on AI with applications in vision, language and biology

About

I'm a PhD student at Stanford in the BioEngineering department. I'm advised by Steve Baccus in neurobiology, Chris Ré in computer science, and Brian Hie in chemical engineering. I'm a part of Hazy Research and Evo Design lab.

Updates

02/27/24: Our preprint on Evo is announced! We try to answer if "DNA is all you need" for a biological foundation model.

01/15/24: Our ICLR '24 paper on FlashFFTConv has been accepted! Excited to visit Vienna, Austria, never been!

12/10/23: I officially joined Brian Hie's lab and will be co-advised. So many excited projects coming :)

06/28/23: I'm very excited to share HyenaDNA, a long-range foundation model for DNA! (update, accepted at NeurIPS '23 as a *spotlight* !)

arxiv,

blog,

colab,

github,

checkpoints,

YouTube talk

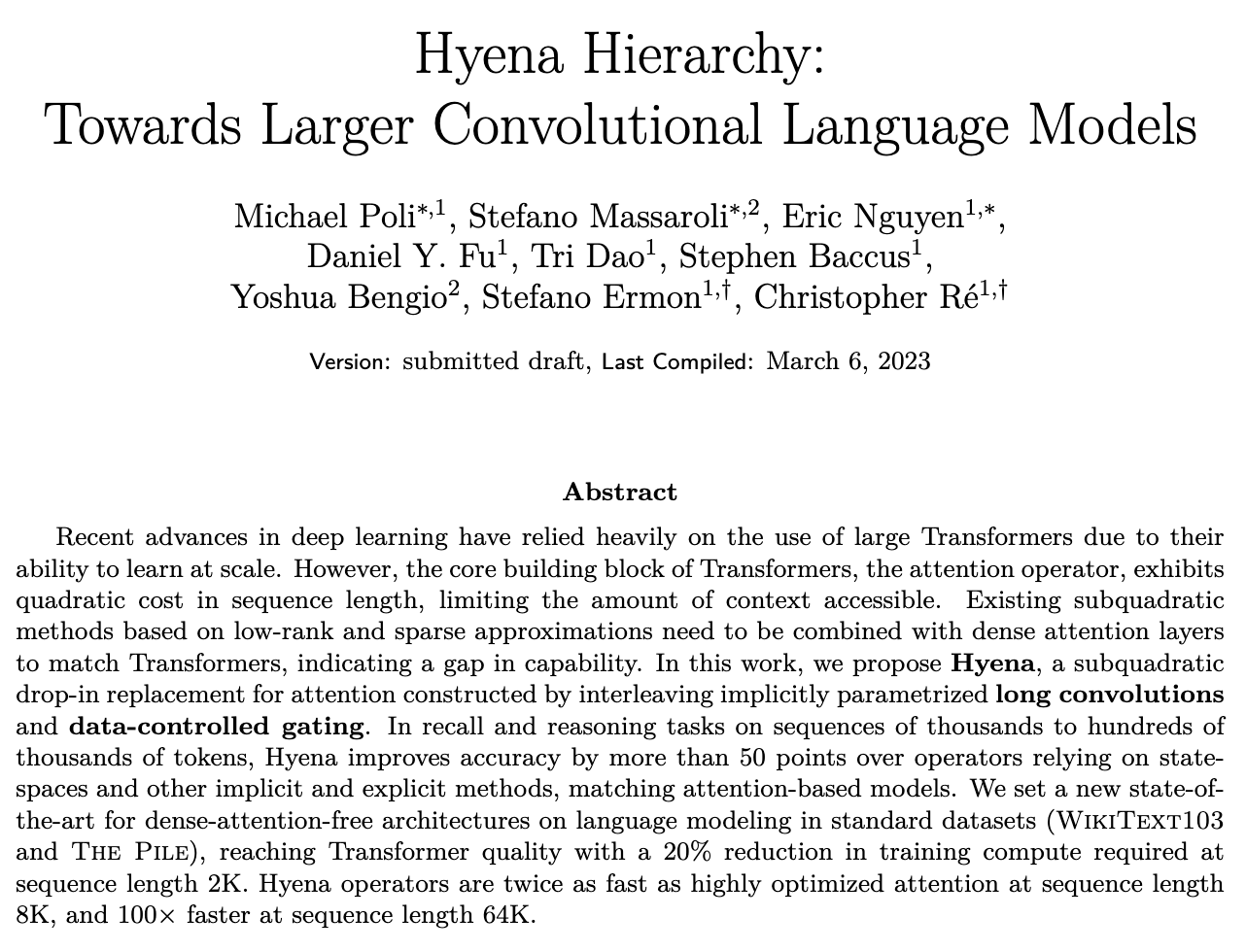

04/24/23: Our ICML '23 paper on Hyena has been accepted! And as an oral presentation, that's a first! Very fortunate to be a part of it.

03/07/23: Excited to share our work on Hyena, an alternative to attention that can learn on sequences *10x longer*, is up to *100x faster* than optimized attention, by using implicit long convolutions & gating! arxiv, code, blog

09/14/22: Our NeurIPS '22 paper on S4ND has been accepted!!! I am SO excited! We extend work on S4 to muldimensional continuous-signals like images and video.

06/21/22: I started my 2nd internship with Google Research on the Machine Intelligence and Image Understanding team, working on text-guided image generation!

05/19/22: We submitted our paper on S4ND to NeurIPS 2022, an extension of S4 to multidimensional signals for modeling images and video! Fingers crossed...!

12/01/21: I officially joined Steve Baccus' lab in neurobiology for my thesis lab! Steve studies the visual system in humans and animals. I'll also be co-advised by Chris Re in computer science! I'm excited to fuse neuroscience and AI!

07/22/21: My paper, OSCAR-Net, just got accepted into ICCV 2021! So excited for my first paper...!

06/15/21: I started my internship at Google Research! I'll be working on multimodal generation (for joint video and audio)!

01/05/21: I started a (joint) lab rotation with Chris Re and Fei-Fei Li, continuing work on CPR quality prediction in videos.

12/1/20: I'll be joining Fei Fei Li's lab in January for a rotation! I'll be in the Partnership in AI-Assisted Care - think smart hospitals with computer vision.

11/16/20: I submitted my first paper to CVPR 2021 on my work at Adobe, and we're patenting the algorithm! (Update - got my first conference rejection!)

09/21/20: I started my first lab rotation with Leo Guibas in computer science. I'll be working on detecting walkable floor space for an assistive-robotic suit.